Semantic Image Search from Multiple Query Images

Introduction

The goal of any Image Retrieval system is to retrieve images from a large visual corpus that are similar to the input query. To date, most Image Retrieval systems (including commercial search engines) base their search on a single image input query.

In this work, we follow a different approach: multiple query images are employed jointly to extract the underlying concepts they have in common. The input images do not need to depict the same concept. In fact, each input image enriches, amplifies, or reduces the importance of one descriptive aspect among the many meanings that any image inherently has.

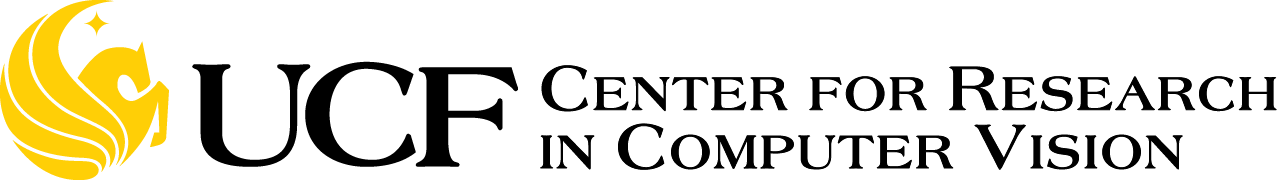

(a) Three input query images. (b) Images retrieved by our system.

Consider the example in the previous figure. Three input images are used to query the system. The first input image is a milk bottle, the second contains a piece of meat, and the third contains a farm. These three images are dissimilar from a visual point of view. However, conceptually, they could be linked by the underlying concept "cattle", since farmers obtain milk and meat from cattle. These types of knowledge-based conceptual relations are the ones that we propose to capture in this new search paradigm.

The use of multiple images as input queries in real scenarios is straightforward, especially when mobile and wearable devices are the user interfaces. Consider for instance a wearable device. In this case, pictures can be passively sampled and used as query inputs of a semantic search to discover the context of the user. Once meaningful semantic concepts are found, many specific applications can be built, including personalization of textual search, reduction of the search space for visual object detection, and personalization of output information such as suggestions, advertising, and recommendations.

The proposed search paradigm is especially useful when the user has only a vague idea of what to search for, or lacks the knowledge to describe it, but does have images of isolated, related concepts.

For example, consider a user looking for ideas for a gift. While walking through the mall, the user takes some pictures of vague ideas for the gift. Based on the provided images, semantic concepts are found and used to retrieve images of gift suggestions. Another example of this type of application is retrieving image suggestions from a domain-specific knowledge area (painting, cooking, etc.), based on the user's more general visual inputs, where the user does not have specialized knowledge in the specific area. For instance, given a couple of paintings that you like as input, the semantic search could find other images that share similar concepts.

Method

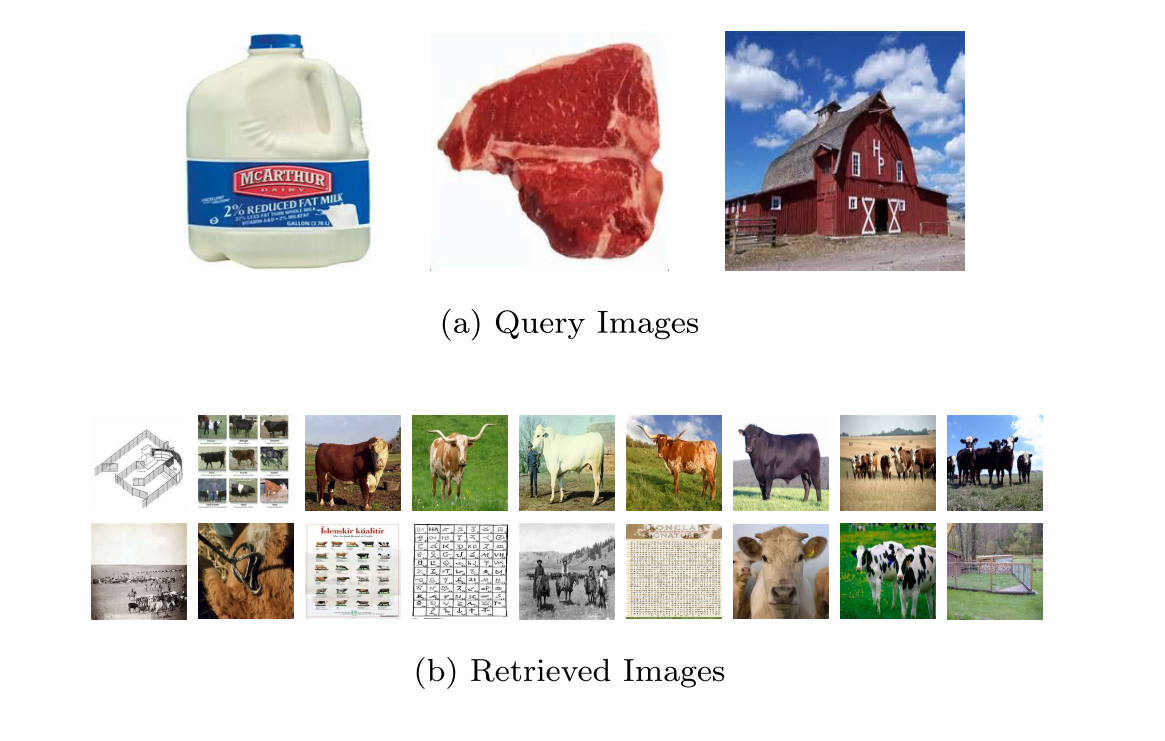

A description of our method is depicted in the following figure.

Description of our method.

Initially, each of the query images Ii is processed individually to retrieve candidate images from the retrieval dataset based on visual similarity. Each query image Ii produces a set of k nearest neighbor candidates, denoted as Cij, where j = 1, ..., k.
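The candidate retrieval step can be illustrated with a minimal sketch. It assumes that each image is represented by a fixed-length global descriptor and that visual similarity is measured with Euclidean distance; the distance metric, the function name `k_nearest_candidates`, and the toy descriptors are assumptions made for illustration and are not specified in the text.

```python
import math


def k_nearest_candidates(query_descriptor, dataset_descriptors, k):
    """Return (index, distance) pairs for the k dataset images closest to the query.

    Euclidean distance is an assumption; any visual distance could be used.
    """
    distances = [
        (index, math.dist(query_descriptor, descriptor))
        for index, descriptor in enumerate(dataset_descriptors)
    ]
    # Sort by increasing distance so the most similar images come first.
    distances.sort(key=lambda pair: pair[1])
    return distances[:k]


# Hypothetical 2-D descriptors; real global descriptors are much longer.
dataset = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (5.0, 5.0)]
candidates = k_nearest_candidates((0.1, 0.0), dataset, k=2)
candidate_indices = [index for index, _ in candidates]
```

In this sketch, the function would be called once per query image Ii, and each call yields that image's candidate set Cij.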

All of the images from the dataset used for retrieval are assumed to have a dual representation: a visual representation given by a global image descriptor and a textual descriptor represented by a histogram of all of the texts describing the image. Hence, every candidate image Cij has an associated textual descriptor represented by a word histogram that serves to link the visual representation with the conceptual representation.

Candidate images that are visually closer to the query image have a higher impact on the text representation of the query image. The most representative words describing the query images are then processed using Natural Language Processing (NLP) techniques to discover new words that are conceptually similar to them.
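As a rough sketch of how visually closer candidates could contribute more to the text representation of a query image, the example below sums the candidates' word histograms with a distance-dependent weight. The weighting function `1 / (1 + distance)` is an assumption chosen for illustration; the text does not state the actual weighting. The NLP step that discovers new words is not shown.

```python
from collections import Counter


def weighted_query_histogram(candidates):
    """Build a word histogram for one query image from its candidates.

    `candidates` is a list of (visual_distance, word_histogram) pairs.
    The weight 1 / (1 + distance) is a hypothetical choice: it only
    encodes that closer candidates should count more.
    """
    histogram = Counter()
    for distance, words in candidates:
        weight = 1.0 / (1.0 + distance)
        for word, count in words.items():
            histogram[word] += weight * count
    return histogram


# Hypothetical candidates for a "milk bottle" query image.
candidates = [
    (0.0, {"milk": 2, "bottle": 1}),
    (1.0, {"milk": 1, "glass": 2}),
]
query_histogram = weighted_query_histogram(candidates)
most_representative = query_histogram.most_common(1)[0][0]
```

The highest-weighted words of such a histogram would be the natural input to the word discovery step.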

Afterwards, the weighted word histograms of the candidate images Cij are summed, and bins are added for the discovered words, producing a single histogram that represents all of the query images jointly. Next, the cosine distance between the tf-idf textual representation of each database image and the joint search representation is computed to retrieve images. Finally, the results are re-ranked to favor images with high visual similarity to any of the query images.
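The joint representation and the text-based ranking can be sketched as follows. This is a simplified illustration under stated assumptions: the smoothed idf formula, the weight given to discovered words (`discovered_weight`), and all function names are hypothetical, and the final visual re-ranking is omitted. Ranking by decreasing cosine similarity is equivalent to ranking by increasing cosine distance.

```python
import math
from collections import Counter


def joint_histogram(query_histograms, discovered_words, discovered_weight=1.0):
    """Sum the per-query histograms and add bins for the discovered words."""
    joint = Counter()
    for histogram in query_histograms:
        joint.update(histogram)  # Counter.update adds counts
    for word in discovered_words:
        joint[word] += discovered_weight  # assumed weight, not from the text
    return joint


def idf_weights(database_histograms):
    """Smoothed inverse document frequency (one common variant, assumed here)."""
    n = len(database_histograms)
    document_frequency = Counter()
    for histogram in database_histograms:
        document_frequency.update(histogram.keys())
    return {w: math.log((1 + n) / (1 + df)) + 1.0
            for w, df in document_frequency.items()}


def tfidf(histogram, idf):
    """Weight each known word by its idf; words unseen in the database are dropped."""
    return {w: c * idf[w] for w, c in histogram.items() if w in idf}


def cosine_similarity(a, b):
    dot = sum(value * b.get(word, 0.0) for word, value in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_database(joint, database_histograms):
    """Return (index, similarity) pairs, most similar database image first."""
    idf = idf_weights(database_histograms)
    query_vector = tfidf(joint, idf)
    scores = [(i, cosine_similarity(query_vector, tfidf(h, idf)))
              for i, h in enumerate(database_histograms)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical data loosely following the milk / meat / farm example.
queries = [{"milk": 2.5, "bottle": 1.0}, {"meat": 2.0}, {"farm": 2.0, "grass": 1.0}]
database = [{"cow": 3, "grass": 1}, {"car": 2, "road": 2}, {"milk": 1, "cow": 1}]
joint = joint_histogram(queries, discovered_words=["cow", "cattle"])
ranking = rank_database(joint, database)
```

In this toy example, the database image that shares no words with the joint representation receives a similarity of zero and is ranked last.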

Results

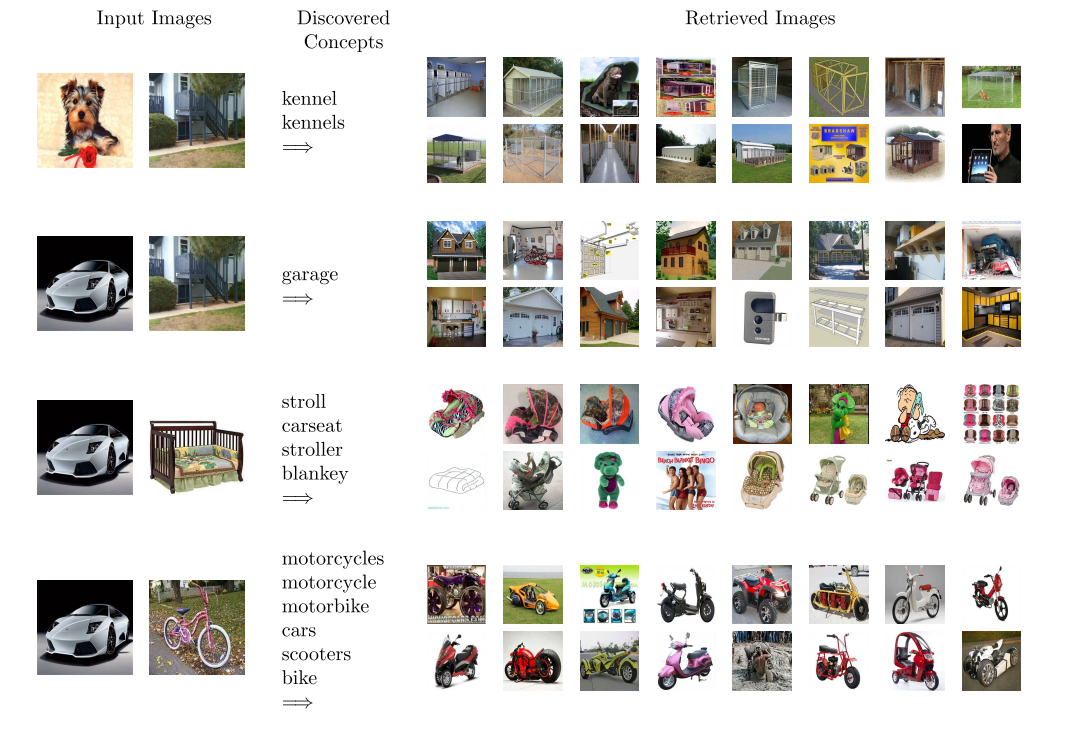

Some qualitative results are show in in the following figure:

Examples of image retrieval using two input images. First column shows the input images, second column shows the discovered words conceptually shared by the input images, and third column shows the retrieval after visual re-raking.

We performed experiments to evaluate our system on a set of 101 pairs (N=2) of input images. The input image pairs were defined with the help of semantic maps downloaded from the internet.

For each pair of query images, we asked several users to rate the top 25 retrieved images. For each retrieved image, they were asked to provide a binary answer to the following question: Is the retrieved image conceptually similar to both input images or not?

Based on the user ratings, we calculated the mean accuracy of the retrieved images from the 101 pairs of query images. Accuracy is reported for the top X retrieved images, with X ranging from 5 to 25 in intervals of 5.
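The accuracy computation can be illustrated with a short sketch. It assumes that each retrieved image ends up with a single binary label (1 if judged conceptually similar to both inputs, 0 otherwise); how ratings from several users are combined is not described in the text. The ratings below are made-up toy values, not results from the experiments, and the function names are hypothetical.

```python
def accuracy_at(ratings, top):
    """Fraction of the first `top` retrieved images rated as conceptually similar."""
    return sum(ratings[:top]) / top


def mean_accuracy_at(all_ratings, top):
    """Average accuracy at `top` over all query pairs."""
    return sum(accuracy_at(r, top) for r in all_ratings) / len(all_ratings)


# Toy binary ratings for two hypothetical query pairs, in retrieval order.
toy_ratings = [
    [1, 1, 1, 0, 1, 0, 1, 0, 0, 1],
    [1, 0, 1, 1, 0, 0, 0, 1, 0, 0],
]
mean_top5 = mean_accuracy_at(toy_ratings, top=5)
mean_top10 = mean_accuracy_at(toy_ratings, top=10)
```

With 25 ratings per pair, the same functions would be evaluated at top values of 5, 10, 15, 20, and 25.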

The baseline method is defined as the image retrieval performed from a joint search representation given by the summation of the individual input textual representations without the addition of words conceptually shared by the input images.

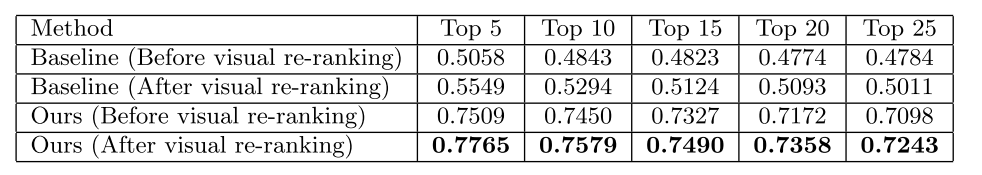

Table below presents the results of accuracy of our method and the baseline.

Mean accuracy of the retrieved images acording to user ratings in 101 pairs of query images. Results are showed at different top retrieval levels.

Demo

A live demo version of the project can be found at: http://crcv.ucf.edu/semanticsearch/example

Related Publications

Gonzalo Vaca-Castano, Mubarak Shah, Semantic Image Search from Multiple Query Images, ACM Multimedia Conference, 2015.

Gonzalo Vaca-Castano, Finding the Topic of a Set of Images, arXiv:1606.07921.